If you’re an Atlassian Marketplace app vendor or you’re exploring how to bring AI capabilities like Rovo into your products and services, this article is for you.

AI is no longer optional – and neither is compliance. What used to be the domain of a few highly regulated sectors like medical devices, pharma, or aerospace has now expanded into almost every industry. Finance, automotive, energy, government, healthcare, biotech, cloud enterprises, and manufacturing all operate under increasing layers of privacy rules, cybersecurity frameworks, safety standards, audit expectations, and now – AI governance requirements.

Your customers aren’t just curious about AI – they’re excited about it. But they’re also required to treat every AI-enabled tool they use as part of their compliance chain, not just a cool feature. That means they’re looking for traceability, auditability, safety controls, data-governance clarity, and strong AI oversight from every vendor they work with – including Marketplace app developers and AI solution providers.

So if you want your AI-powered software tool, Atlassian app, or Rovo integration to be adopted in these environments, or if you want to sell confidently into enterprises that have auditors and internal governance committees, you need to understand the expectations these customers now operate under.

This blog post walks you through the seven most important considerations AI tool developers must understand before offering their solutions to regulated industries. It is based on a webinar that SoftComply and Orthogonal hosted on November 12, 2025, where we broke down:

- what regulated teams require from AI tools, and
- what AI vendors must provide in order to meet those requirements.

This structure is designed specifically to help AI tool developers like Atlassian Marketplace partners anticipate and answer the questions regulated customers are already starting to ask.

You can also watch our YouTube video summarizing the 7 considerations:

7 Key Considerations for AI Tool Developers in Regulated Industries

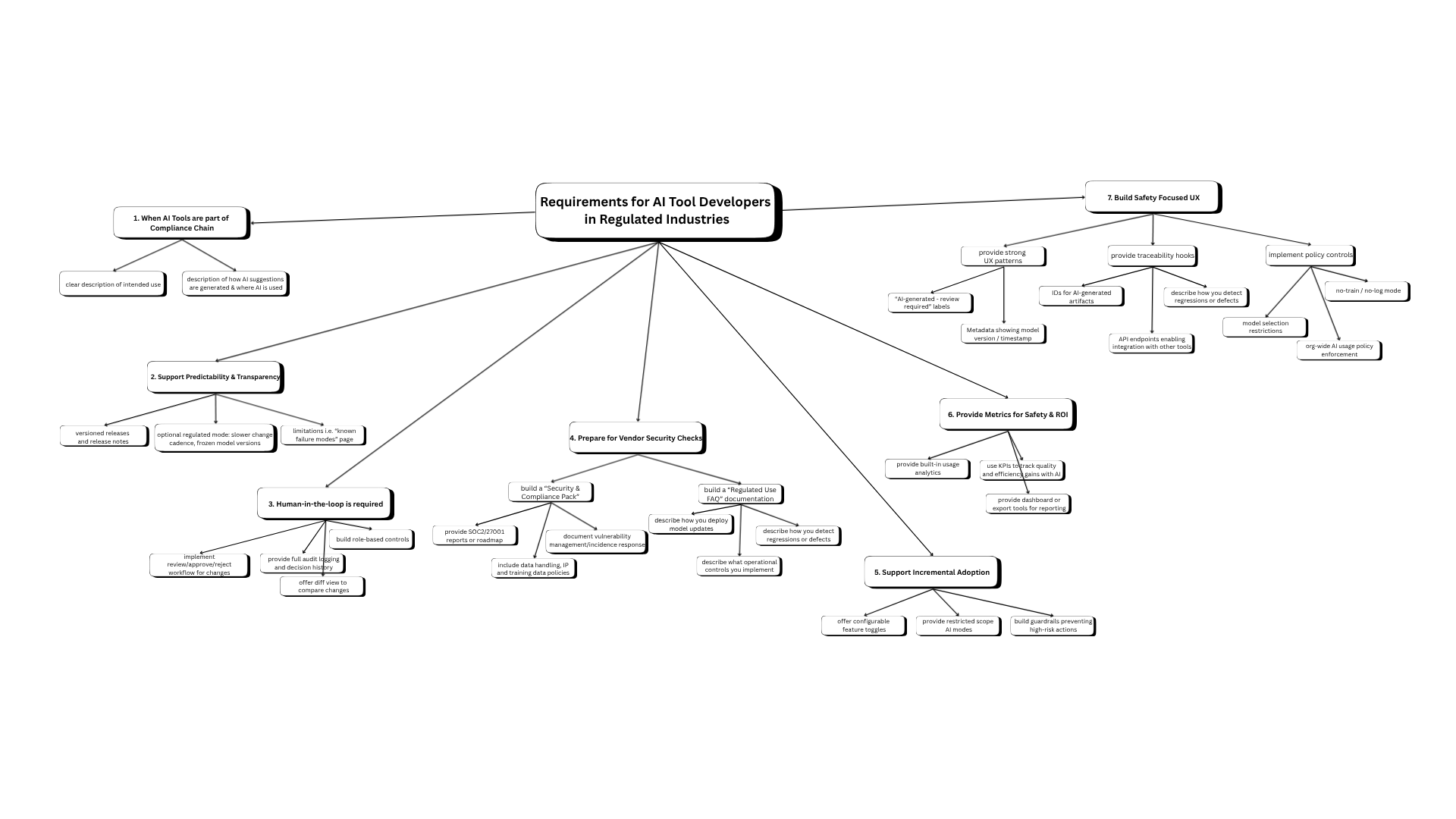

Here are seven critical considerations AI tool developers must understand when building and offering AI solutions for regulated industries.

These points also highlight why – even when an AI tool is technically excellent – regulated organizations cannot adopt it immediately.

1. AI tools aren’t “just tools” in regulated industries – they affect compliance

In regulated industries, an AI development tool isn’t just a convenience – it becomes part of the safety and compliance chain.

| What regulated industry customers must do | What AI tool vendors should provide |
| --- | --- |
| Regulated teams need to be clear about how the AI assists them (that is, the intended use of the AI tool) and where the human remains responsible. They must think through situations where the AI could generate incorrect code, test cases, or risk controls, and ensure people, not algorithms, make the final judgments. | This helps regulated customers correctly scope the AI’s role in their development process. |

2. Model freezing won’t always work – but predictability & transparency will

One of the biggest challenges for regulated industries adopting AI development tools is the pace of model evolution. LLMs update frequently, often silently, and sometimes in ways that change model behaviour. This is great for innovation but a nightmare for regulatory validation.

| What regulated industry customers must do | What AI tool vendors should provide |
| --- | --- |
| Because freezing a model is often not an option, regulated teams need to understand which model version was used at what time, and how changes in the AI might affect validation or safety-relevant work. If behaviour shifts or new features appear, they need to reassess risk, not assume the tool still works the same way. | These elements help regulated customers show auditors that they understand – and can manage – change. |
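To make the version-tracking idea concrete, here is a minimal Python sketch of a usage log that stores the model version next to each AI-assisted artifact and flags anything produced by a version the team has not yet assessed. Everything in it is an assumption for illustration: the names `AiUsageRecord` and `needs_reassessment`, the artifact IDs, and the version strings do not come from any real product or standard.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AiUsageRecord:
    """Hypothetical record linking an artifact to the model that produced it."""
    artifact_id: str
    model_version: str
    used_at: datetime


def needs_reassessment(record: AiUsageRecord, validated_versions: set[str]) -> bool:
    """Return True when the model version is not in the assessed set."""
    return record.model_version not in validated_versions


# Assumed: the versions the team has already risk-assessed.
validated = {"model-2025-06"}

records = [
    AiUsageRecord("TEST-101", "model-2025-06", datetime(2025, 7, 1, tzinfo=timezone.utc)),
    AiUsageRecord("TEST-102", "model-2025-09", datetime(2025, 10, 3, tzinfo=timezone.utc)),
]

# Artifacts created with an unassessed model version need a fresh risk review.
flagged = [r.artifact_id for r in records if needs_reassessment(r, validated)]
print(flagged)
```

A real tool would likely persist these records and tie the assessed-version set to the customer's own validation process; the sketch only shows the shape of the data.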

3. Human-in-the-loop is mandatory – support it

In safety-critical and regulated industries, AI can assist – but it cannot decide. This is not just a best practice. It’s a requirement in various regulations and international standards that these industries must comply with.

| What regulated industry customers must do | What AI tool vendors should provide |
| --- | --- |
| Even the smartest AI cannot replace human accountability in regulated environments. Teams must review and approve anything the AI generates that may influence safety, risk, or traceability. They need a clear record of who reviewed what and why decisions were made – this is what auditors will look for first. | If you build these controls into your app or platform, you immediately become more suitable for regulated markets. |
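As a rough illustration of what such a control could look like, the Python sketch below models an AI-generated draft that cannot be released until a named person approves it and records why. The class `AiDraft`, its fields, and the sample values are hypothetical; this is one possible shape, not a prescribed implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AiDraft:
    """Hypothetical AI-generated item awaiting human review."""
    artifact_id: str
    content: str
    reviewer: Optional[str] = None
    rationale: Optional[str] = None
    reviewed_at: Optional[datetime] = None

    def approve(self, reviewer: str, rationale: str) -> None:
        # Refuse approvals that would leave the audit trail incomplete.
        if not reviewer.strip() or not rationale.strip():
            raise ValueError("approval needs a named reviewer and a rationale")
        self.reviewer = reviewer
        self.rationale = rationale
        self.reviewed_at = datetime.now(timezone.utc)

    @property
    def releasable(self) -> bool:
        # Only human-approved drafts may flow into controlled records.
        return self.reviewed_at is not None


draft = AiDraft("RISK-7", "Proposed mitigation text")
before = draft.releasable
draft.approve("j.doe", "Checked against hazard analysis")
after = draft.releasable
```

The point of the design is that the "who" and the "why" are captured at the moment of approval, so the record auditors ask for exists by construction.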

4. AI tool vendors are regulated suppliers

When an AI tool influences product quality or compliance, the vendor becomes a critical supplier.

| What regulated industry customers must do | What AI tool vendors should provide |
| --- | --- |
| Regulated teams must assess vendor maturity, transparency, data handling, change processes, and incident response – just like any other regulated supplier. They also need clear contractual terms about data use, especially around prompts and model training. | The more you can “pre-answer” these questions, the easier it becomes for regulated teams to select your product. |

5. Support incremental AI tool adoption – not full autonomy

Regulated companies cannot adopt AI like consumer or enterprise teams do. Instead, they must adopt AI gradually – one workflow, one team, one risk level at a time.

| What regulated industry customers must do | What AI tool vendors should provide |
| --- | --- |
| Teams should start with low-risk use cases where AI helps but doesn’t decide – such as drafting tests or summarizing documentation. They need clear rules about where AI can operate and where it cannot, and they must enforce these rules through permissions and process boundaries. This approach ensures AI adoption grows safely and predictably. | This helps regulated industry customers adopt AI safely, gradually, and in a controlled manner. |
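The "clear rules about where AI can operate" can be pictured as a small policy check. The Python sketch below is only an illustration under stated assumptions: the use-case names, the three-level `Risk` scale, and the `ai_allowed` function are made up for this example, and unknown use cases are denied by default.

```python
from enum import IntEnum


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Assumed example policy: known use cases and how risky each one is.
USE_CASE_RISK = {
    "draft_tests": Risk.LOW,
    "summarize_docs": Risk.LOW,
    "propose_risk_controls": Risk.HIGH,
}


def ai_allowed(use_case: str, max_risk: Risk) -> bool:
    """Allow AI only for known use cases at or below the approved risk level."""
    risk = USE_CASE_RISK.get(use_case)
    # Unknown use cases are denied by default.
    return risk is not None and risk <= max_risk


# The team starts with low-risk work only and can raise this level later.
approved_level = Risk.LOW
print(ai_allowed("draft_tests", approved_level))
print(ai_allowed("propose_risk_controls", approved_level))
```

Raising `approved_level` one step at a time mirrors the gradual adoption described above: the boundary moves only when the team decides it should.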

6. Regulated industries will ask about metrics – help your users show ROI

In regulated industries, teams cannot simply adopt an AI tool because it “seems helpful.” They must demonstrate, with objective evidence, that the AI:

1. improves productivity or quality, and

2. does not introduce safety or compliance risks.

| What regulated industry customers must do | What AI tool vendors should provide |
| --- | --- |
| Regulated teams using AI must be able to show that the tool improves efficiency without compromising quality or safety. They should track whether AI-assisted work produces fewer defects, better coverage, or faster cycles – and demonstrate that AI hasn’t introduced new risks. Metrics justify continued use and satisfy auditors that AI is helping, not harming. | This turns your AI from a “mysterious black box” into a “measurable productivity tool.” |
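A simple way to picture this kind of evidence is a side-by-side comparison of AI-assisted and non-assisted work. The Python sketch below assumes a hypothetical `WorkItem` record and uses made-up sample numbers purely for illustration; real metrics would come from the team's own issue tracker and test data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkItem:
    """Hypothetical record of one completed piece of work."""
    ai_assisted: bool
    defects_found: int
    cycle_days: float


def summarize(items: list[WorkItem], ai_assisted: bool) -> dict[str, float]:
    """Average defects and cycle time for one group of work items."""
    group = [i for i in items if i.ai_assisted == ai_assisted]
    if not group:
        raise ValueError("no work items in this group")
    return {
        "avg_defects": sum(i.defects_found for i in group) / len(group),
        "avg_cycle_days": sum(i.cycle_days for i in group) / len(group),
    }


# Made-up sample data, for illustration only.
items = [
    WorkItem(True, 1, 2.0),
    WorkItem(True, 3, 4.0),
    WorkItem(False, 2, 5.0),
    WorkItem(False, 4, 7.0),
]

with_ai = summarize(items, ai_assisted=True)
without_ai = summarize(items, ai_assisted=False)
print(with_ai, without_ai)
```

Averages like these are only a starting point; a team would still need enough data and comparable work items before drawing conclusions.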

7. Safety-focused UX matters – good boundaries make good AI tools

In regulated companies, the UX of your AI tool is not cosmetic – it’s a safety feature.

| What regulated industry customers must do | What AI tool vendors should provide |
| --- | --- |
| Clarity is critical: users must be able to see what the AI generated, what humans authored, and what has been approved. Regulated teams need straightforward ways to track where content originated and how it flows across requirements, risks, tests, and documentation. Policies should define which data AI may access, and which artifacts it is prohibited from touching. | These design choices make your AI feature adoptable across regulated industries. |
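One small, concrete form of this clarity is a provenance label shown next to every piece of content. The Python sketch below is an assumption-laden example: `Origin`, `ContentBlock`, and `provenance_label` are hypothetical names, and the label wording is just one option.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    AI_GENERATED = "AI-generated"
    HUMAN_AUTHORED = "Human-authored"


@dataclass(frozen=True)
class ContentBlock:
    """Hypothetical piece of content with its origin and approval state."""
    text: str
    origin: Origin
    approved: bool = False


def provenance_label(block: ContentBlock) -> str:
    """Build the label a UI could show next to a piece of content."""
    status = "approved" if block.approved else "pending review"
    return f"{block.origin.value} ({status})"


blocks = [
    ContentBlock("Verify alarm triggers at threshold", Origin.AI_GENERATED),
    ContentBlock("Alarm shall trigger at threshold", Origin.HUMAN_AUTHORED, approved=True),
]
labels = [provenance_label(b) for b in blocks]
print(labels)
```

Keeping origin and approval as separate fields means AI-generated content stays marked as such even after a human approves it.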

Closing Message for AI Tool Vendors

Let’s be honest: most AI tools used in software development today don’t meet any of these seven requirements.

But we cannot afford to wait until something goes wrong – until a device malfunctions or a patient gets hurt – before we raise the bar.

If you can’t deliver all seven safeguards today, focus on the ones that matter most – the first three considerations:

- Define the intended use clearly
- Keep humans in control
- Document model versions and behavior changes

These three are the foundation of trustworthy AI in regulated development. Do them right, and you’re already far ahead of most tools on the market.

An Example AI Tool Audit-Readiness Checklist for Medical Device Developers

The following is a list of best practices for AI tool adoption, along with the evidence auditors may look for when auditing the Quality Management System of a medical device company:

| Best Practice / Audit Question | Objective Evidence to Show Auditors | Reference / Source |
| --- | --- | --- |
| Have you defined and documented the intended use of each AI tool? | Tool Intended Use Statement describing purpose, boundaries, and human oversight. | ISO 13485:2016 §4.1.6 |
| Have you classified each AI tool by its risk impact on product quality or safety? | Tool Risk Classification matrix (low / medium / high) with justification. | ISO/TR 80002-2:2017 §6 |
| Is each AI tool validated for its intended use (not for perfection)? | Validation Plan + Test Results showing consistent, reproducible performance. | ISO 13485 §7.6 / ISO/TR 80002-2 §7 / GAMP 5 (2nd Ed.) §4.4 |
| Are humans always in the loop for AI-generated content? | SOP or workflow requiring human review & approval of all AI outputs. | EU AI Act Art. 14 (Human Oversight) |
| Can you trace each AI-generated artifact to its tool, version, and reviewer? | Audit log or metadata fields (tool ID, model version, timestamp, review signature). | ISO/TR 80002-2 Annex D |
| Are AI tool and model versions locked and documented? | Version control records; “frozen model” evidence; revalidation trigger criteria. | ISO/TR 80002-2 §7.4 |
| Are data, IP, and privacy protected when using AI tools (no uncontrolled data flow to vendors)? | Data-handling SOP, vendor agreements, and network architecture showing isolation. | ISO 13485 §4.2 |
| Do AI tool vendors provide transparency (model card, update notes, limitations)? | Supplier file including AI Factsheet / Model Card / Changelog. | ISO 13485 §7.4 (Supplier Control) |
| Do you monitor AI tool performance continuously and revalidate after significant change? | Ongoing monitoring logs, benchmark dataset results, drift analysis reports. | ISO/TR 80002-2 §7.4 |
| Do you maintain a complete AI Tool Validation File? | One folder per tool containing: intended use, risk, validation plan, test results, vendor docs, version logs, approvals. | ISO 13485 §4.1.6 |